There are many scripts in the world. In this article, the word script means a system of writing, with its alphabet and characters; it does not mean VBScript or JavaScript…

Scripts

A script is a set of characters, such as an alphabet, used for writing textual content. http://en.wikipedia.org/wiki/ISO_15924 has the list of scripts standardized by ISO. Scripts and languages are in a many-to-many correspondence: one script can serve several languages, and one language can be written in several scripts.

We have many examples of one script used to write different languages. E.g.

- Latin Script (ISO:LATN) is used to write English, French, German etc.

- Arabic Script (ISO:ARAB) is used to write Arabic, Farsi, Urdu, Pashto etc.

- Devanagari Script (ISO:DEVA) is used to write Sanskrit, Hindi, Marathi, Konkani, Nepali etc.

There are languages which can be written in different scripts. E.g.

- Panjabi is written in Gurmukhi Script (ISO:GURU) in India, but in Arabic Script (ISO:ARAB) in Pakistan.

- Serbian is written in Latin Script (ISO:LATN) or Cyrillic (ISO:CYRL) depending upon the geographical area or preference.

- English, which is generally written in Latin Script (ISO:LATN), is also written in Braille Script (ISO:BRAI).

One should consciously think about a script as a way of representing the language without speaking.

History

From the early 1960s to the 1980s, computers were used almost exclusively by the scientific and engineering community for heavy calculations. The unit of storage in a computer is the byte, which consists of 8 bits. Each bit can be either ON or OFF, represented as 1 or 0. A set of 8 bits, i.e. a byte, can represent 256 numbers (0 to 255), one for each possible combination of 1s and 0s. This way of writing numbers is called the Binary Numeral System; for excellent information on it, please refer to the Wikipedia article http://en.wikipedia.org/wiki/Binary_numeral_system . The earliest computers had no display screens or printers; they used a series of lights (ON or OFF) to display their answers.
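As a small illustration of the arithmetic above, the following Python sketch counts the combinations available in one byte and shows a few numbers written as 8 bits. The variable names are only for illustration.

```python
# A byte has 8 bits, and each bit has 2 possible states (0 or 1).
BITS_PER_BYTE = 8
combinations = 2 ** BITS_PER_BYTE
print(combinations)  # 256

# The smallest value, the largest value and one in between, as 8 bits.
smallest = format(0, "08b")
middle = format(65, "08b")
largest = format(255, "08b")
print(smallest, middle, largest)  # 00000000 01000001 11111111

# Reading a string of bits back as an ordinary number.
value = int("01000001", 2)
print(value)  # 65
```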

People were not happy with just numbers and blinking lights, and electric typewriters and printers were already available. Computer folks came up with the concept of representing characters with numbers. They designed the first encodings, and the encoding called ASCII was widely adopted. ASCII used 7 bits, i.e. 128 possible numbers, and mapped characters to them; e.g. the character ‘A’ was represented by the number 65. Then they wrote computer instructions to print the shape of the character ‘A’ whenever the number 65 came up. The 128 available codes covered all the letters, digits and punctuation required by English, plus some non-printable characters which were actually commands to the printer, e.g. 10 meant “advance to next line” and 12 meant “advance to next page”. The first goal was to be able to print business information (bank statements, inventory information, invoices etc.) and programming instructions (code) written in programming languages like FORTRAN, COBOL and BASIC. Later, display screens were developed and the printer technology was extended to these displays, so the shapes of characters started showing up on monitor screens.
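The ASCII mapping described above can be checked with Python's built-in `ord` and `chr` functions. This is only a quick sketch; the sample word "Hello" is my own choice.

```python
# Character to number, and number back to character.
code_of_a = ord("A")
print(code_of_a)   # 65
print(chr(65))     # A

# Codes 10 and 12 are the printer commands "line feed" and "form feed".
line_feed = chr(10)
form_feed = chr(12)
print(line_feed == "\n", form_feed == "\f")  # True True

# A word stored as ASCII is just a list of numbers below 128.
hello_codes = list("Hello".encode("ascii"))
print(hello_codes)  # [72, 101, 108, 108, 111]
```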

In Western Europe, languages such as French, German and Spanish were widely used, so ASCII was extended to 8 bits (Extended ASCII), allowing 256 possible values; that took care of the alphabets of the Western European languages. People in Greece, Russia and other countries started to use computers and found that they needed room for their own characters, so they reassigned some of the 256 values to characters specific to Greek or Russian. These maps are called Code Pages; please refer to https://en.wikipedia.org/wiki/Code_page . Soon other languages joined the party and wanted their share of the available 256 values.

So there came a situation where, depending upon the Code Page in use, the character code 195, say, represented

- Thai letter “Ro Rua” ร

- Greek letter capital “gamma” Γ

- Arabic letter “Alef with hamza above” أ

- …

and one could not reliably tell what the text was unless one knew in advance which script (alphabet) one was trying to read.
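The ambiguity can be reproduced in Python by decoding the single byte 195 with several code pages. The codec names below (`cp874`, `iso8859_7`, `cp1251`, `cp1252`) are ones that ship with Python; which code page a particular old system actually used is an assumption on my part.

```python
# One byte with the value 195 (hex C3).
raw = bytes([195])

# The same byte read through four different code pages.
code_pages = {
    "Thai": "cp874",
    "Greek": "iso8859_7",
    "Cyrillic": "cp1251",
    "Western European": "cp1252",
}

decoded = {name: raw.decode(codec) for name, codec in code_pages.items()}

for name, char in decoded.items():
    # Print the character together with its Unicode code point.
    print(f"{name:17} {char}  U+{ord(char):04X}")
```

The byte never changes; only the table used to interpret it does, which is why the reader had to know the code page in advance.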

And then the Japanese, Korean and Chinese writing systems entered the fray, bringing with them thousands of characters each…

So, over the years, people of the world came together and created the Unicode standard, in which every character gets its own unique value. Of course, they could not fit everything into 256 values, so they decided to use 2 bytes, i.e. 16 bits, together, giving a possible range of 65,536 characters in which every script got its own space. With Unicode, text in all scripts can coexist in one file, document or communication without being misinterpreted.

Since 16-bit Unicode took up twice the space of a single-byte encoding, people did not adopt it readily. Those who had data in only one language and never sent it internationally did not care, and kept on using the single-byte systems. In the last 10 years or so, the cost of data storage, both in RAM and on disk, has come down and communication speed has increased, so the doubled data size does not matter anymore. UTF-8 is a popular encoding for storing and sharing Unicode text: it uses a variable number of bytes per character, and ASCII characters keep their original single-byte codes.
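To make the size question concrete, here is a small Python sketch comparing how many bytes a few characters need in UTF-8 and in 16-bit Unicode (UTF-16). The four sample characters are my own choice.

```python
samples = ["A", "é", "न", "中"]

# For each character, record (bytes in UTF-8, bytes in UTF-16).
sizes = {
    ch: (len(ch.encode("utf-8")), len(ch.encode("utf-16-be")))
    for ch in samples
}

for ch, (utf8_len, utf16_len) in sizes.items():
    print(ch, utf8_len, utf16_len)

# Plain ASCII text is byte-for-byte identical in ASCII and in UTF-8.
same_as_ascii = "Hello".encode("utf-8") == "Hello".encode("ascii")
print(same_as_ascii)  # True
```

The letter ‘A’ takes one byte in UTF-8 and two in UTF-16, while the Devanagari and Chinese characters take three bytes in UTF-8 and two in UTF-16.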

Unicode is neither a linguistic standard nor a phonetic standard. It standardizes scripts.

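It was later acknowledged that 65,536 characters were not enough for all the scripts of the world, so Unicode was extended beyond 16 bits. Its code space now runs from 0 to 0x10FFFF, which gives a little over 1.1 million possible values. In the 16-bit UTF-16 encoding, a character above 65,535 is written as a pair of special 16-bit values called surrogates.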
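The surrogate mechanism can be sketched in a few lines of Python. The function name `to_surrogate_pair` is hypothetical, and the Gothic letter at code point 0x10330 is just an example of a character above 65,535; the result is checked against Python's own UTF-16 encoder.

```python
def to_surrogate_pair(code_point):
    """Split a code point above 0xFFFF into two 16-bit surrogate values."""
    if not 0x10000 <= code_point <= 0x10FFFF:
        raise ValueError("only code points 0x10000 to 0x10FFFF need surrogates")
    offset = code_point - 0x10000      # a 20-bit number
    high = 0xD800 + (offset >> 10)     # top 10 bits go into the first value
    low = 0xDC00 + (offset & 0x3FF)    # bottom 10 bits go into the second
    return high, low


gothic_ahsa = 0x10330  # GOTHIC LETTER AHSA
high, low = to_surrogate_pair(gothic_ahsa)
print(hex(high), hex(low))  # 0xd800 0xdf30

# Python's built-in UTF-16 encoder produces the same two 16-bit values.
encoded_hex = chr(gothic_ahsa).encode("utf-16-be").hex()
print(encoded_hex)  # d800df30
```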

Transliteration

Generally, languages are written in their traditionally standardized scripts. For example, the traditional way to write the word “knowledge” is in LATIN script, but one can write “नॉलेज” in Devanagari or “నోలేజ్” in Telugu, and the pronunciation will be almost the same. Transliteration is the writing of a language in a script which is not traditionally used for that language. Many text messages are written transliterated in LATIN script because of the universal acceptance of LATIN characters. E.g., in India it is common to send a text message with the greeting “Namaste” in LATIN, though traditionally it would be written in Devanagari as “नमस्ते”.
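To a computer, the two spellings of the greeting are entirely different sequences of characters. The Python sketch below uses the standard `unicodedata` module to look at the Unicode name of each character; taking the first word of the name as the script is a rough shortcut of mine, not the official Unicode script property, and `scripts_used` is a hypothetical helper name.

```python
import unicodedata


def scripts_used(text):
    """Return the first word of each character's Unicode name, as a set."""
    return {unicodedata.name(ch).split()[0] for ch in text}


latin_scripts = scripts_used("Namaste")
devanagari_scripts = scripts_used("नमस्ते")

print(latin_scripts)        # {'LATIN'}
print(devanagari_scripts)   # {'DEVANAGARI'}

# The full names of the first character of each spelling.
print(unicodedata.name("N"))   # LATIN CAPITAL LETTER N
print(unicodedata.name("न"))   # DEVANAGARI LETTER NA
```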

Fonts

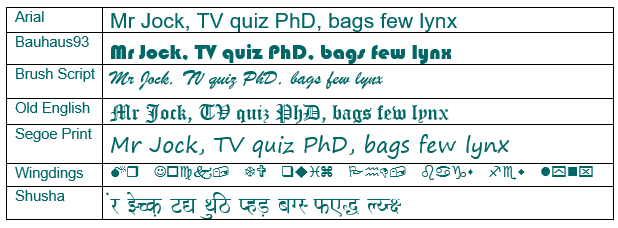

Fonts are data files that define visual shapes of characters. Here is an example of the same text in different fonts.

The last two examples are interesting because they show Latin script text (i.e. English text) in practically unreadable form, using the fonts Wingdings and Shusha. In the days before Unicode, fonts were created for the 256 character positions of a Code Page. Following the same idea as Wingdings, font designers put different-looking shapes in the positions of the Latin characters, so the text looked like a different alphabet. This is referred to as “Font based encoding”, which, in other words, means that you cannot read the text unless you have the font that goes with it. If you do not have the font, the data will look like gibberish.

If the data is saved in Unicode, it retains its identity even if the font does not have a visual representation of a character; in such cases a rectangular box is displayed on the screen. However, if you are seeing question marks instead of characters, it means either that the text was not originally written in Unicode and was converted to Unicode using the wrong Code Page, or that the program displaying the text is not Unicode compliant and uses Code Pages.
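Both failure modes can be imitated in Python. This is a minimal sketch that assumes a program limited to the Western European code page `cp1252`; real programs may behave differently in detail.

```python
# Case 1: a Code Page based program receives Unicode text it cannot store.
# Each character missing from the code page is replaced by a question mark.
text = "नमस्ते"
seen_by_legacy_program = text.encode("cp1252", errors="replace").decode("cp1252")
print(seen_by_legacy_program)  # ??????

# Case 2: bytes written with one code page are read with another.
# The Greek capital gamma is stored as byte 195 in the Greek code page...
greek_bytes = "Γ".encode("iso8859_7")
# ...but a reader assuming the Western European code page sees another letter.
wrongly_decoded = greek_bytes.decode("cp1252")
print(wrongly_decoded)  # Ã
```

In the first case the original characters are lost for good, because every one of them has been turned into the same question mark. In the second case the bytes are intact and only the interpretation is wrong.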

The Unicode Consortium’s web site is http://www.unicode.org and the Wikipedia page is https://en.wikipedia.org/wiki/Unicode . Please visit them to get a better understanding of Unicode, better and more “official” than what I have summarized in the few paragraphs above.